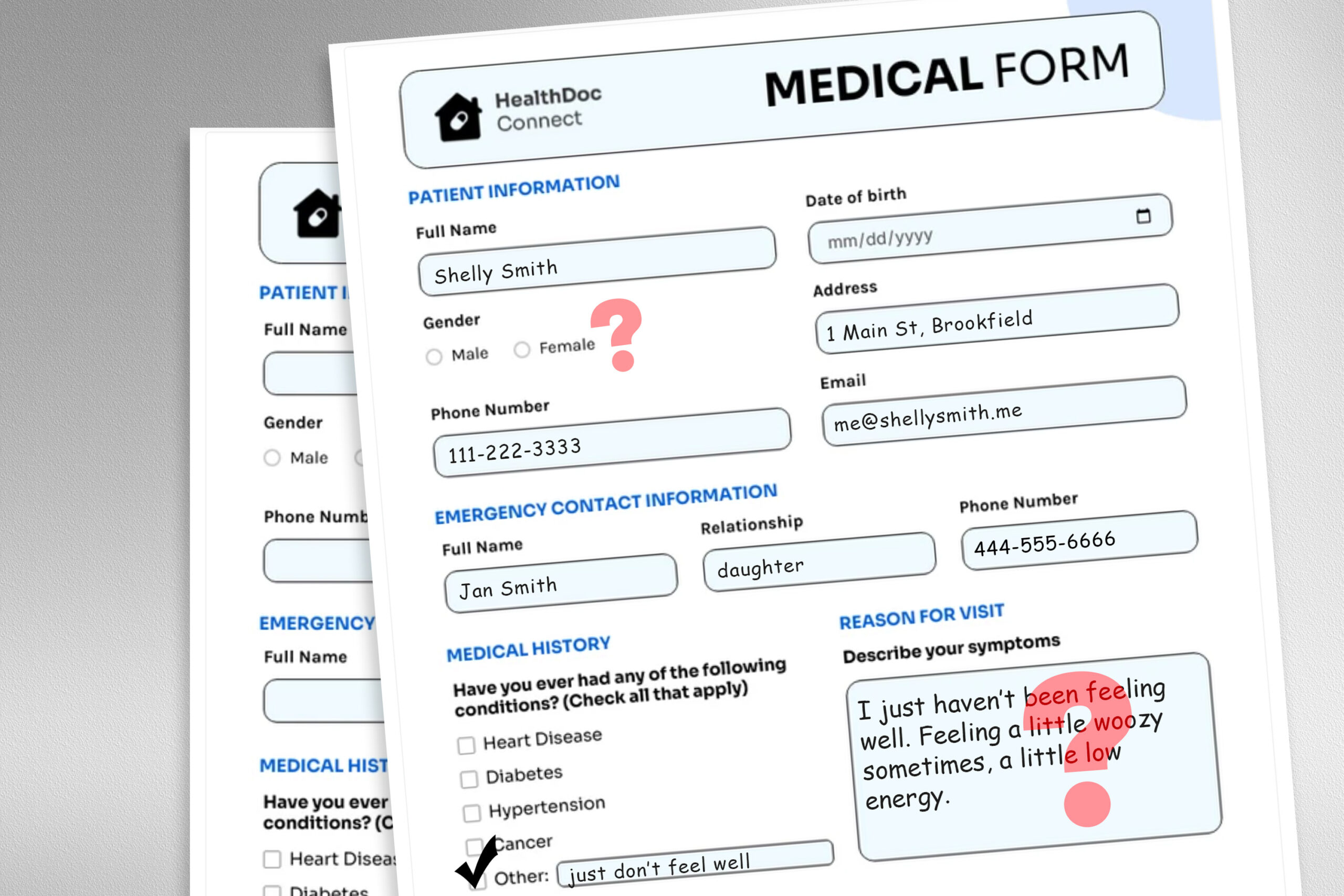

A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical information in patient messages, like typos, extra white space, missing gender markers, or the use of uncertain,…

Category: AI

-

Building Production-Ready Custom AI Agents for Enterprise Workflows with Monitoring, Orchestration, and Scalability

In this tutorial, we walk you through the design and implementation of a custom agent framework built on PyTorch and key Python tooling, ranging from web intelligence and data science modules to advanced code generators. We’ll…


-

EmbodiedGen: A Scalable 3D World Generator for Realistic Embodied AI Simulations

The Challenge of Scaling 3D Environments in Embodied AI

Creating realistic and accurately scaled 3D environments is essential for training and evaluating embodied AI. However, current methods still rely on manually designed 3D…


-

Google Researchers Release Magenta RealTime: An Open-Weight Model for Real-Time AI Music Generation

Google’s Magenta team has introduced Magenta RealTime (Magenta RT), an open-weight, real-time music generation model that brings unprecedented interactivity to generative audio. Licensed under Apache 2.0 and available on GitHub…


-

DeepSeek Researchers Open-Sourced a Personal Project named ‘nano-vLLM’: A Lightweight vLLM Implementation Built from Scratch

DeepSeek researchers just released a super cool personal project named ‘nano-vLLM’, a minimalistic and efficient implementation of the vLLM (virtual Large Language Model) engine, designed specifically for users who value…


-

IBM’s MCP Gateway: A Unified FastAPI-Based Model Context Protocol Gateway for Next-Gen AI Toolchains

The development and deployment of advanced AI systems increasingly depend on flexible, robust orchestration layers that bridge diverse models, tools, and resources. IBM’s MCP Gateway addresses this need by providing a…


-

Why Apple’s Critique of AI Reasoning Is Premature

The debate around the reasoning capabilities of Large Reasoning Models (LRMs) has recently been invigorated by two prominent yet conflicting papers: Apple’s “Illusion of Thinking” and Anthropic’s rebuttal titled “The…


-

Texas A&M Researchers Introduce a Two-Phase Machine Learning Method Named ‘ShockCast’ for High-Speed Flow Simulation with Neural Temporal Re-Meshing

Challenges in Simulating High-Speed Flows with Neural Solvers

Modeling high-speed fluid flows, such as those in supersonic or hypersonic regimes, poses unique challenges due to the rapid changes associated with shock waves and…


-

This AI Paper Introduces WINGS: A Dual-Learner Architecture to Prevent Text-Only Forgetting in Multimodal Large Language Models

Multimodal LLMs: Expanding Capabilities Across Text and Vision

Expanding large language models (LLMs) to handle multiple modalities, particularly images and text, has enabled the development of more interactive and intuitive AI…


-

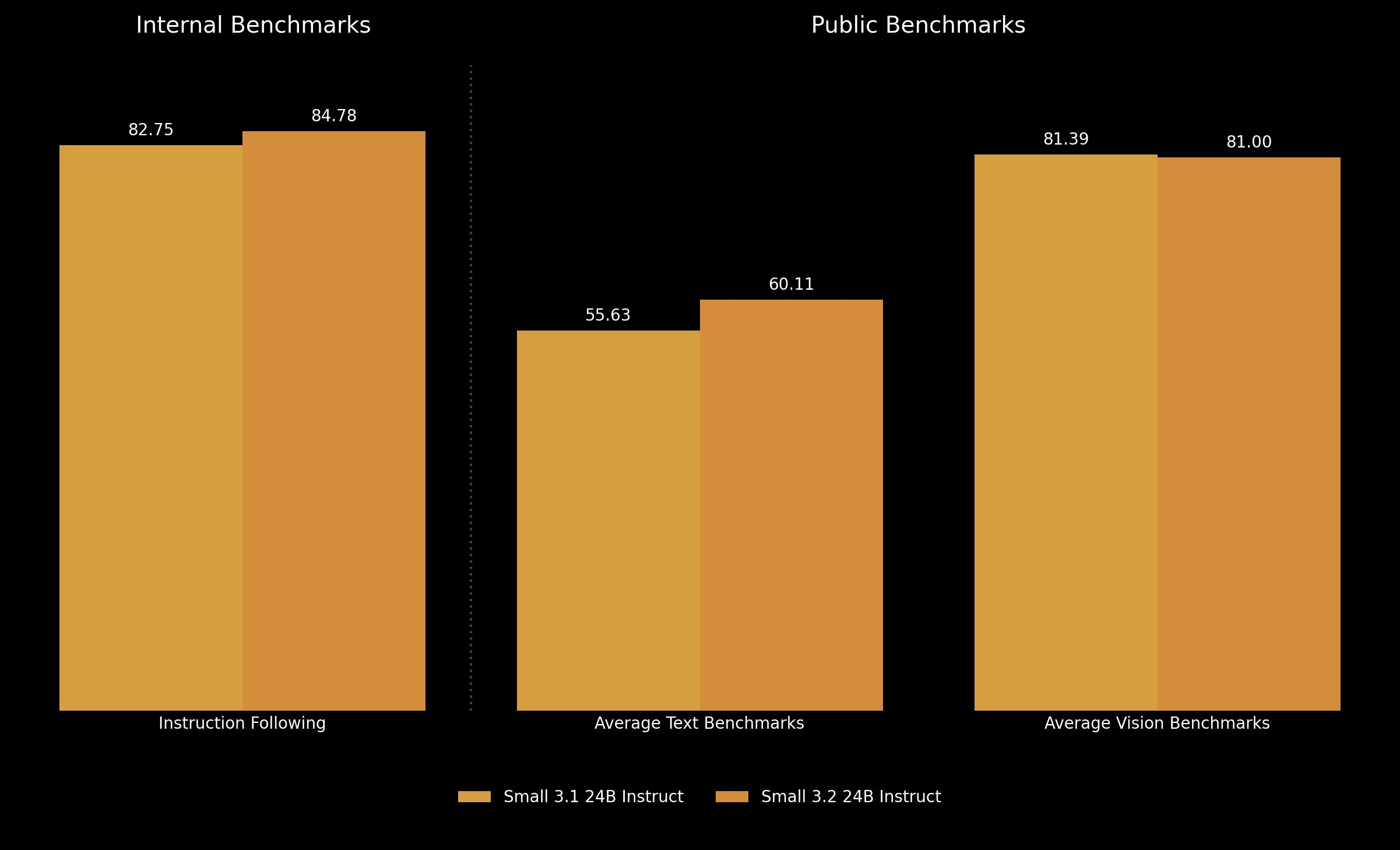

Mistral AI Releases Mistral Small 3.2: Enhanced Instruction Following, Reduced Repetition, and Stronger Function Calling for AI Integration

With the frequent release of new large language models (LLMs), there is a persistent quest to minimize repetitive errors, enhance robustness, and significantly improve user interactions. As AI models become integral to more…
