The Need for Cognitive and Adaptive Search Engines

Modern search systems are evolving rapidly as the demand for context-aware, adaptive information retrieval grows. With the increasing volume and complexity of user queries,…

A pro-Russia disinformation campaign is leveraging consumer artificial intelligence tools to fuel a “content explosion” focused on exacerbating existing tensions around global elections, Ukraine, and immigration, among other controversial…

As Mark Zuckerberg staffs up Meta’s new superintelligence lab, he’s offered top-tier research talent pay packages of up to $300 million over four years, with more than $100 million in total compensation for the first year, WIRED has learned.

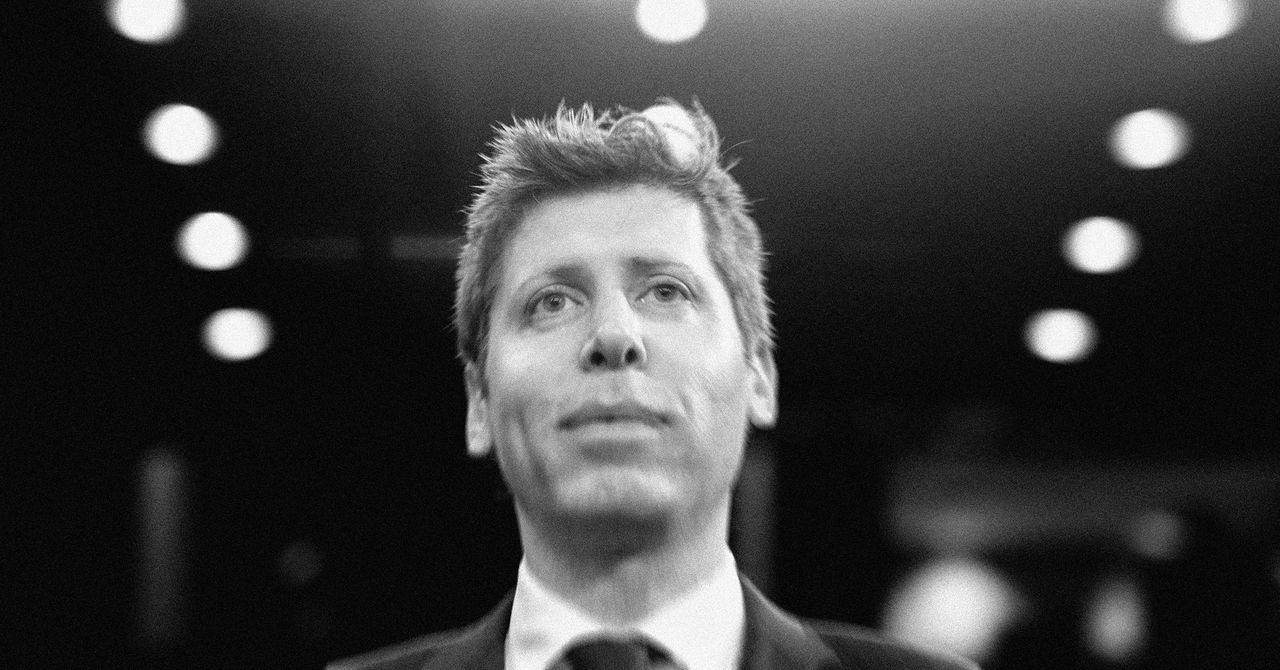

OpenAI CEO Sam Altman is hitting back at Meta CEO Mark Zuckerberg’s recent AI talent poaching spree. In a full-throated response sent to OpenAI researchers Monday evening and obtained by WIRED, Altman made his pitch for why staying at OpenAI is…

An AI-generated “bigfoot baddie,” with acrylic nails and a pink wig, speaks directly to her imaginary audience using an iPhone. “We might have to go on the run,” she says. “I’m wanted for a false report on my baby daddy.” This AI…

Baidu has officially open-sourced its latest ERNIE 4.5 series, a powerful family of foundation models designed for enhanced language understanding, reasoning, and generation. The release includes ten model variants ranging from…

Large-scale language models with long chain-of-thought (CoT) reasoning, such as DeepSeek-R1, have shown good results on Olympiad-level mathematics. However, models trained through Supervised…

Last year, internet infrastructure firm Cloudflare launched tools enabling its customers to block AI scrapers. Today the company has taken its fight against permissionless scraping several steps further. It has switched to blocking AI crawlers by…

This post covers three main areas:
• Why Mixture of Experts is Needed in Transformers
• How Mixture of Experts Works
• Implementation of MoE in Transformer Models

The Mixture of Experts (MoE) concept was first introduced in 1991 by…
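As a rough illustration of the routing idea behind MoE (not the linked post's implementation), here is a minimal pure-Python sketch under stated assumptions. A linear gate scores each expert, only the top-k experts run, and their outputs are mixed using softmax weights renormalized over the selected experts. The names `moe_forward` and `gate_weights`, the toy scaling experts, and the choice of top-2 routing are all hypothetical.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route vector x to the top-k experts and mix their outputs.

    gate_weights: one weight vector per expert (hypothetical linear gate).
    experts: callables mapping a vector to a vector of the same length.
    """
    # Gate logits: dot product of x with each expert's gate vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Sparse routing: keep only the k highest-scoring experts.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize gate scores over the selected experts only.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top, probs


# Toy experts (hypothetical): expert i scales its input by (i + 1).
experts = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, top, probs = moe_forward([1.0, 2.0], gate_weights, experts, k=2)
print("selected experts:", top)
print("gate weights:", probs)
print("output:", out)
```

Evaluating only the selected experts is what lets MoE layers grow total parameter count without a proportional increase in per-token compute; real Transformer implementations add details such as load-balancing losses and expert capacity limits, which this sketch omits.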

In this tutorial, we walk you through the seamless integration of AutoGen and Semantic Kernel with Google’s Gemini Flash model. We begin by setting up our GeminiWrapper and SemanticKernelGeminiPlugin classes to bridge the…