The development of large-scale language models (LLMs) has historically required centralized access to extensive datasets, many of which are sensitive, copyrighted, or governed by usage restrictions. This constraint severely limits…

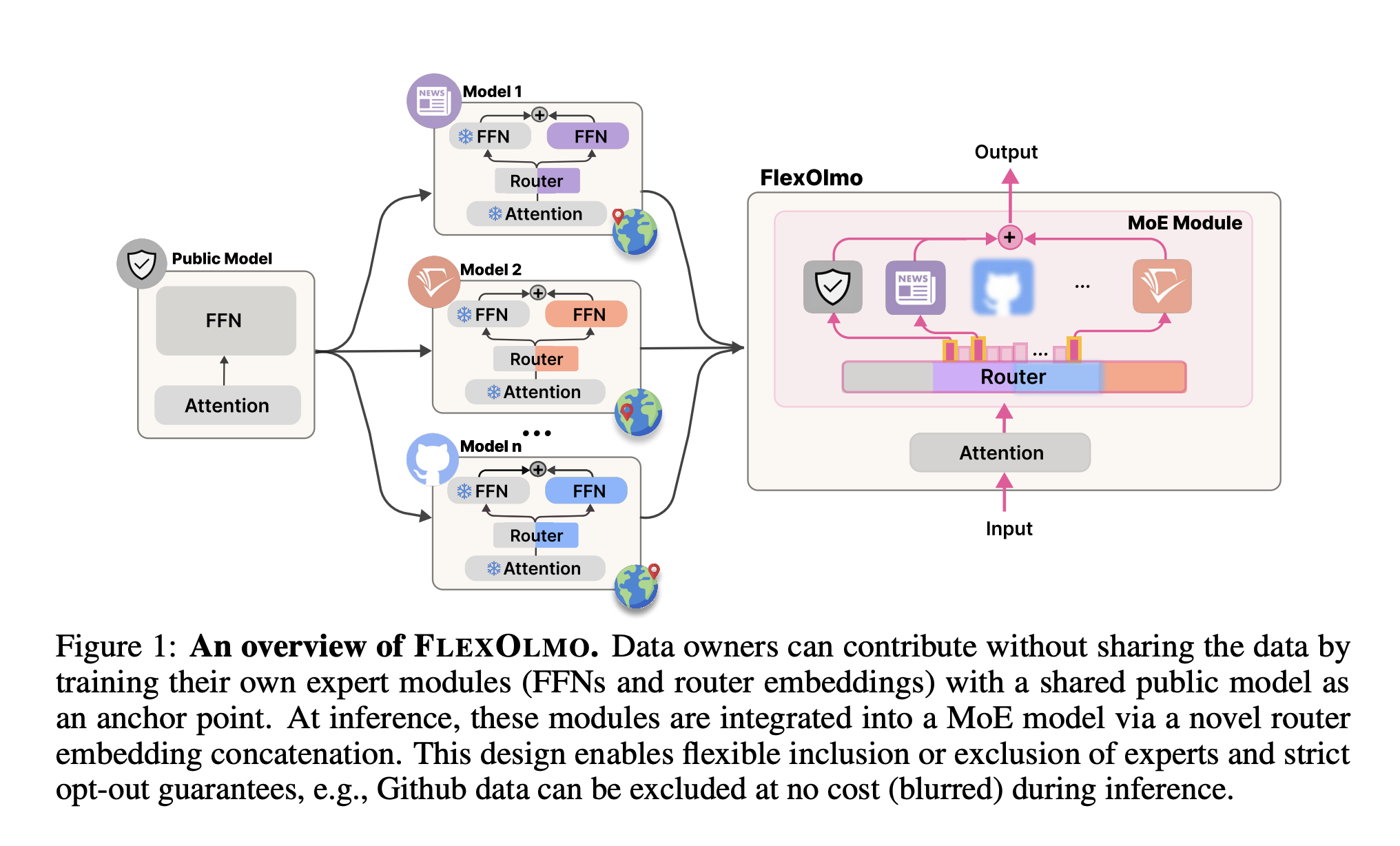

You Don’t Need to Share Data to Train a Language Model Anymore—FlexOlmo Demonstrates How