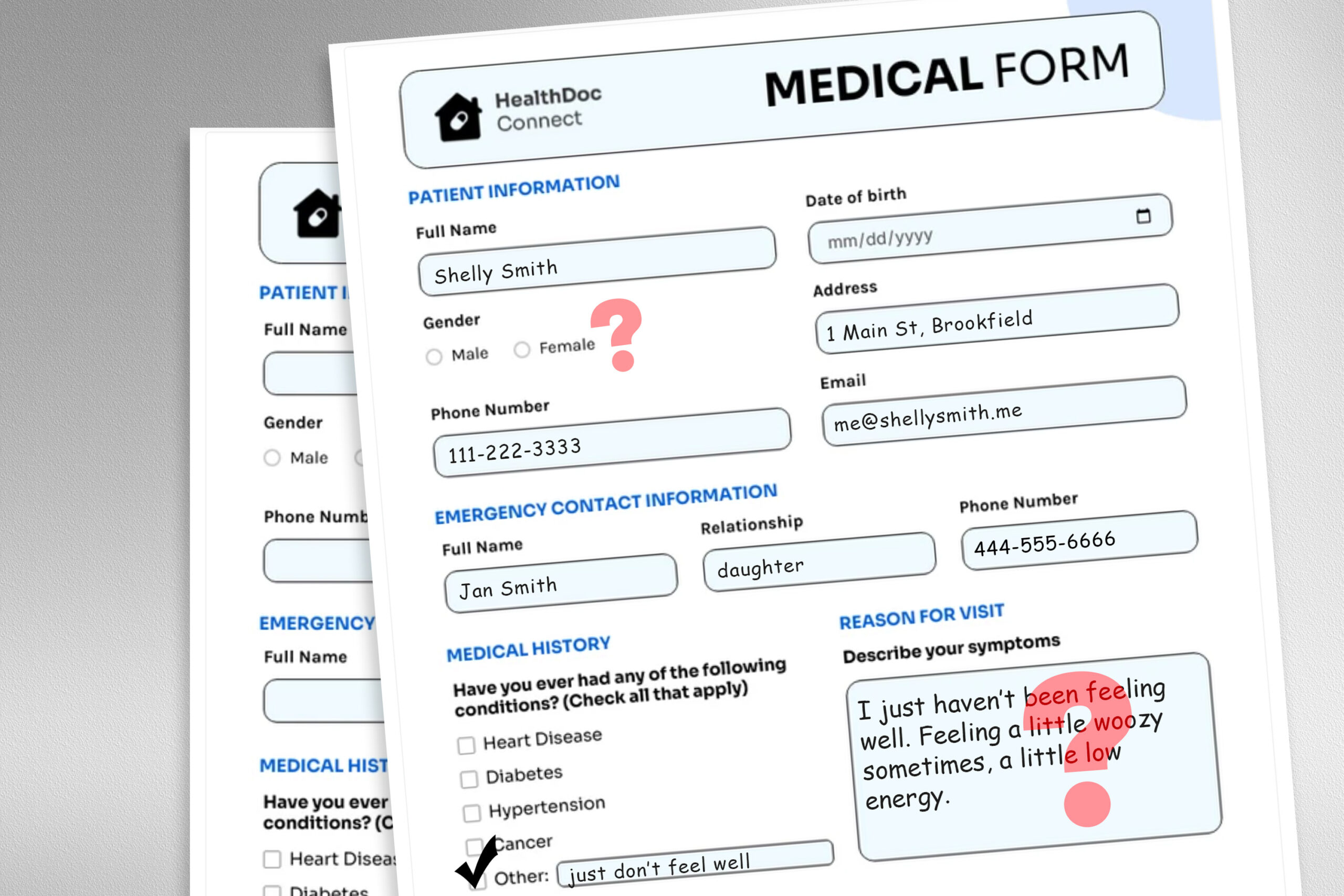

A large language model (LLM) deployed to make treatment recommendations can be tripped up by nonclinical information in patient messages, like typos, extra white space, missing gender markers, or the use of uncertain,…

LLMs factor in unrelated information when recommending medical treatments | MIT News