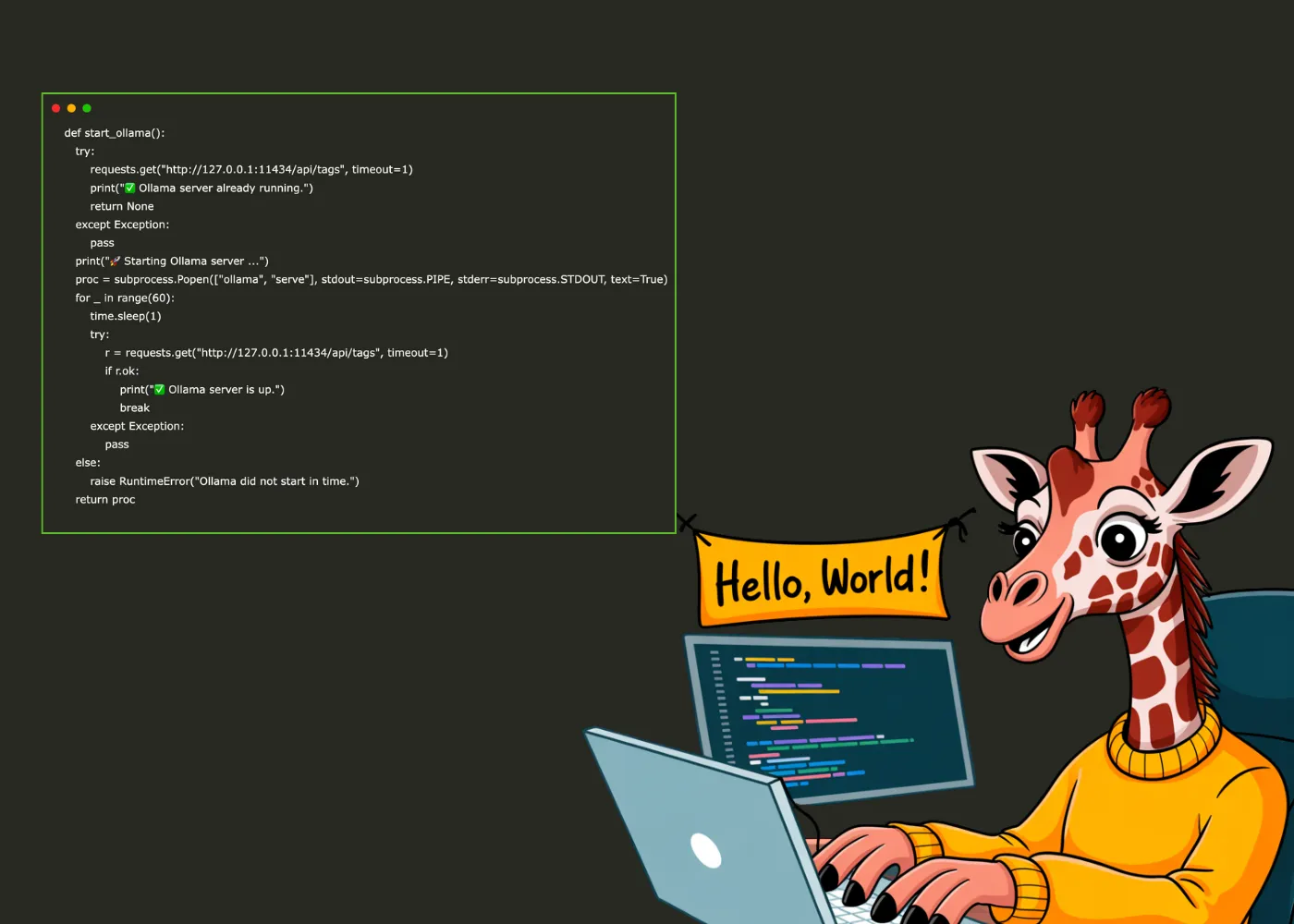

In this tutorial, we implement a fully functional Ollama environment inside Google Colab to replicate a self-hosted LLM workflow. We begin by installing Ollama directly on the Colab VM using the official Linux installer and then…

A Coding Implementation to Build a Complete Self-Hosted LLM Workflow with Ollama, REST API, and Gradio Chat Interface
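
The workflow named in the title (install Ollama, serve it locally, query it over REST) could be sketched roughly as follows. This is a minimal illustration, not the tutorial's own code: the model tag `llama3.2:1b`, the helper names (`install_and_start`, `build_chat_payload`, `collect_stream`, `chat`), and the fixed startup delay are all assumptions. It relies only on Ollama's documented install script, its default port 11434, and the streaming `/api/chat` endpoint.

```python
import json
import subprocess
import time
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default local address
MODEL = "llama3.2:1b"  # assumption: substitute any model tag you prefer


def install_and_start(model=MODEL):
    """Install Ollama via the official Linux script, start the server, pull a model."""
    subprocess.run("curl -fsSL https://ollama.com/install.sh | sh",
                   shell=True, check=True)
    server = subprocess.Popen(["ollama", "serve"],
                              stdout=subprocess.DEVNULL,
                              stderr=subprocess.DEVNULL)
    time.sleep(5)  # crude wait (assumption); polling the port would be more robust
    subprocess.run(["ollama", "pull", model], check=True)
    return server


def build_chat_payload(model, user_text, history=None):
    """Build the JSON body for /api/chat from prior messages plus the new user turn."""
    messages = list(history or [])
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages, "stream": True}


def collect_stream(lines):
    """Concatenate the content of newline-delimited JSON chunks from /api/chat."""
    parts = []
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("utf-8")
        raw = raw.strip()
        if not raw:
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def chat(user_text, history=None, model=MODEL):
    """Send one chat turn to the local Ollama server and return the full reply."""
    body = json.dumps(build_chat_payload(model, user_text, history)).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return collect_stream(response)  # the response object iterates line by line
```

In a Colab cell, one would call `install_and_start()` once and then `chat("Hello")`. A Gradio chat interface could wrap `chat` in a similar way, for example through `gr.ChatInterface`, though the exact wiring depends on the Gradio version in use.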