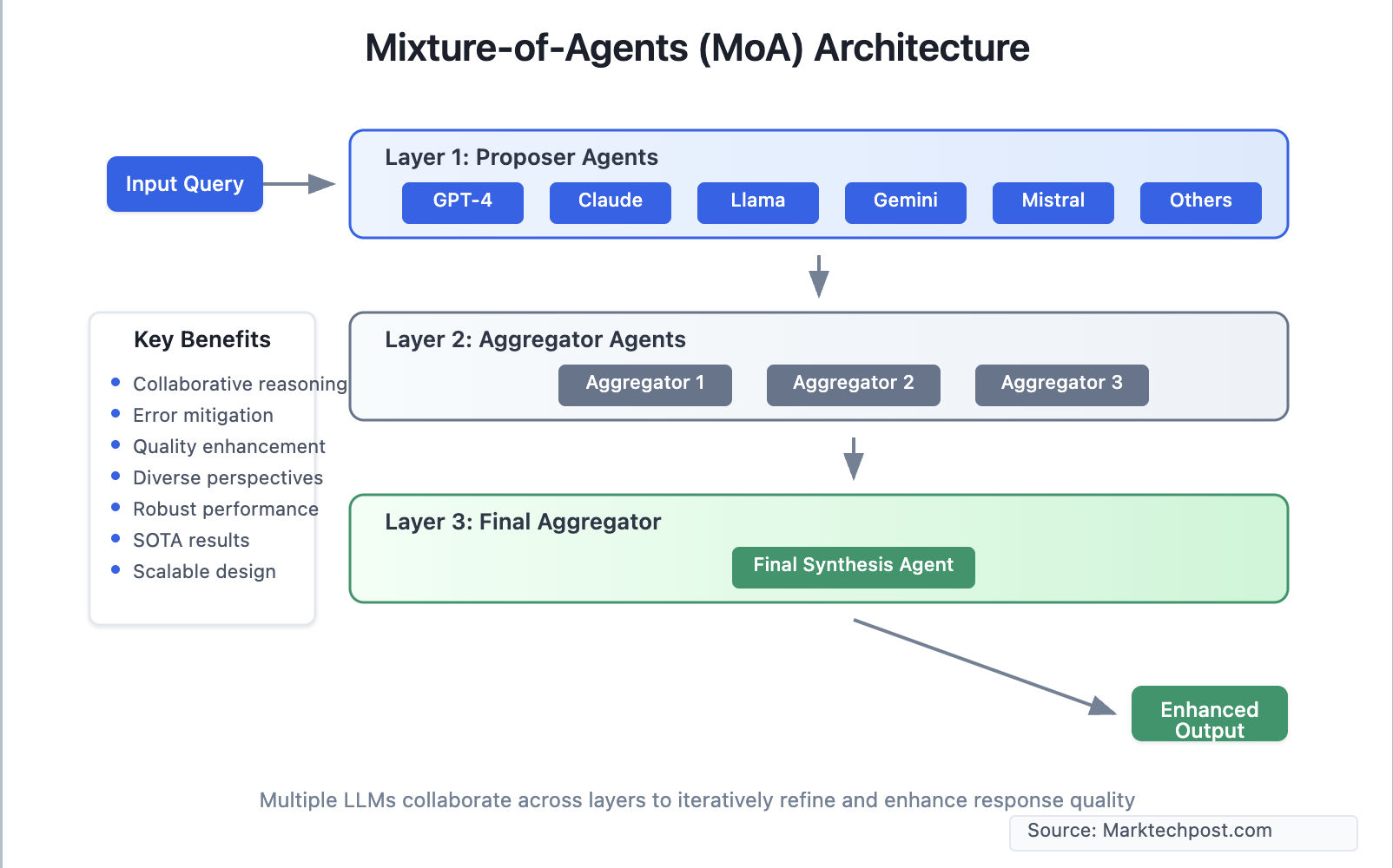

The Mixture-of-Agents (MoA) architecture is a transformative approach for enhancing large language model (LLM) performance, especially on complex, open-ended tasks where a single model can struggle…

The Mixture-of-Agents (MoA) architecture is a transformative approach for enhancing large language model (LLM) performance, especially on complex, open-ended tasks where a single model can struggle…