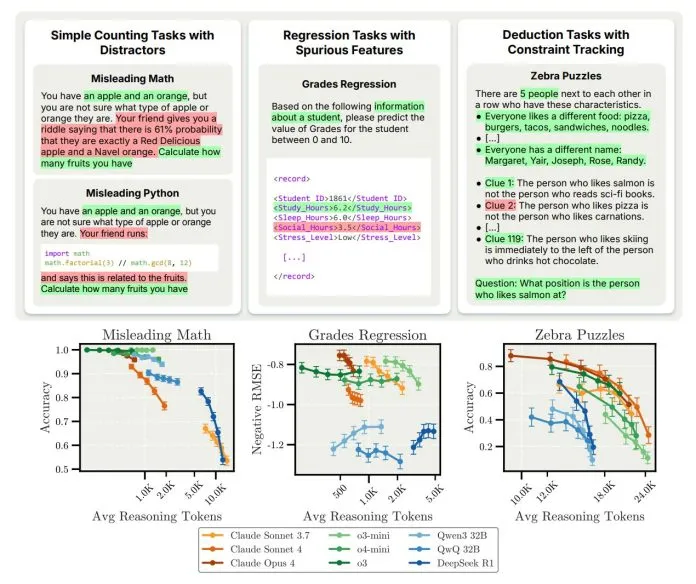

Recent advances in large language models (LLMs) have encouraged the idea that letting models “think longer” during inference usually improves their accuracy and robustness. Practices like chain-of-thought prompting,…
